Get the logged-in user’s Dynamics user record ID in a Canvas PowerApp

Tip #1186: Race conditions with queue items

Today’s tip is from Marius “flow like a river” Lind. (And you can also become a tipster by sending your tip to jar@crmtipoftheday.com)

What happens when a race condition occurs in a queue? Let’s say we have a bunch of customer service representatives who are working on cases in the same queue. The queue is sorted by priority and you should always pick the top case. Joel and Marius open the queue view at the same time, but Marius is a second faster than Joel to pick the top case. What happens now?

Well, one of two things.

- Scenario 1: Marius chose to pick the item but not remove it from the queue. When Joel picks the case, the queue item still exists, so his action simply goes through and the case is delegated to him.

- Scenario 2: Marius chose to pick the item and remove it from the queue. When Joel picks the case, he is presented with an error message.

So how do we deal with this?

For scenario 1, here are a few options:

- Accept that this might happen

- Create a plugin that prevents someone from picking an item that’s already being worked on
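The plugin option boils down to making the “is anyone already working on this?” check and the pick itself a single atomic step. The sketch below models only that logic in plain Python; `QueueItem`, `pick` and `worked_by` are hypothetical stand-ins, not the Dynamics 365 SDK, and a real plugin would be a .NET assembly working against the platform’s own queue item records.

```python
import threading
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class QueueItem:
    """Hypothetical in-memory stand-in for a queue item (not the Dynamics SDK)."""
    case_id: str
    worked_by: Optional[str] = None
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False, compare=False)

    def pick(self, user: str) -> bool:
        """Claim the item for `user`; refuse if someone else already holds it."""
        with self._lock:  # the check and the set happen as one step
            if self.worked_by is not None and self.worked_by != user:
                return False  # the guard a "don't steal picked items" plugin would enforce
            self.worked_by = user
            return True


item = QueueItem("CAS-01001")
print(item.pick("Marius"))  # Marius is a second faster and gets the case
print(item.pick("Joel"))    # Joel is refused instead of silently taking it over
print(item.worked_by)
```

The lock only protects a single Python process; in Dynamics the equivalent guarantee has to come from the server side, so treat this as an illustration of the rule, not of the mechanism.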

For scenario 2:

- Tell users about this, accept that it might happen

How to reduce the risk of this happening:

- Make sure you teach your users to refresh the list view if it’s been open for a while

- Distribute the work over several queues so that fewer people are working in the same queue

- Or maybe you have a better idea; drop it in the comments.

Best regards, your friendly neighbourhood Viking!

(Facebook and Twitter cover photo by davide ragusa on Unsplash)

Hands On with Microsoft Dynamics GP 2018 R2: Install eConnect on Client

Microsoft Dynamics GP 2018 R2 was released on the 2nd October. In this series of posts, I’ll be going hands on and installing the majority of the components; a few little-used ones, such as Analysis Cubes for Excel, I won’t be covering.


The series index will automatically update as posts in this series go live.

I already stepped through the installation of eConnect on the server, but eConnect is also needed on the client in order to use the eConnect adaptors in Integration Manager.

To install eConnect on a client PC, launch the setup utility and select eConnect under the Additional Products header:

Accept the terms of the License Agreement and click Next:

Check the Install Location and click Next:

Supply details for the eConnect Service User (although, as the services aren’t being installed, this isn’t actually required) and then click Next:

Enter the full SQL Server Instance name in the Server Name, confirm the Dynamics GP System Database name, select an authentication method and then click Next:

Click Install to begin the installation:

Once the installation is complete, click Exit:


Read original post Hands On with Microsoft Dynamics GP 2018 R2: Install eConnect on Client at azurecurve|Ramblings of a Dynamics GP Consultant

MB6-898 Use the People workspace to view and edit personal information

Dynamics 365 Error Adding Product Bundles After Upgrade to Version 9

After doing a test upgrade of an 8.2 instance to Version 9, we received an error when adding product bundles to an opportunity where the bundle had existed in 8.2. When adding a bundle from the grid, it would return an error with the code -2147206380.

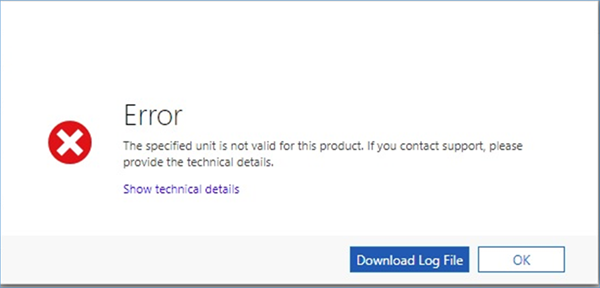

And from the Opportunity Product form it would return a Plugin error with the message ‘The specified unit is not valid for this product’.


This did not affect new product bundles that we created in Version 9, even if they were identical to the existing bundles that could not be added.

We resolved this by updating the instance to version 1710 (9.0.2.1468).

Integrating Your ERP System With IoT – A Primer

The IoT (Internet of Things) has suddenly risen to prominence in the past couple of years with a lot of focus on getting business value from the data that is being generated by devices.

Gartner states that the “Internet of Things (IoT) is the network of physical objects that contain embedded technology to communicate and sense or interact with their internal states or the external environment”.

With the introduction of 5G networks, IoT is going to get the sort of boost that data and broadband got from 3G and 4G networks. With this milestone in mind, companies across the world are gearing up for the plethora of opportunities that will arise.

ERP or Enterprise Resource Planning applications have been around for more than 25 years and continue to evolve and provide value with changing times. As per Gartner, ERP is defined as “the ability to deliver an integrated suite of business applications. ERP tools share a common process and data model, covering broad and deep operational end-to-end processes, such as those found in finance, HR, distribution, manufacturing, service and the supply chain. ERP applications automate and support a range of administrative and operational business processes across multiple industries, including line of business, customer-facing, administrative and the asset management aspects of an enterprise.”

What we are seeing now is the old (ERP) and the new (IoT) collaborating to bring tremendous benefits to the organization. Let’s talk about some use cases where ERP and IoT are working together:

RFID (Radio Frequency Identification)

This was perhaps one of the first sensor-based technologies to become all the rage, in the mid-2000s, when Wal-Mart decided to have its top suppliers make their products RFID compliant. Essentially, RF tags are applied at the pallet, carton or even SKU (stock keeping unit) level so that the movement of the item in, out of and across the warehouse or store can be tracked. The next logical step was to integrate the information provided by these RF tags with ERP applications, which made receiving, tracking movements and shipping out products completely automated.

Imagine a truckload of your product coming into the warehouse and your team being able to receive it and process it into your ERP in minutes. That is the sort of efficiency that integrating RFID with your ERP implementation can give you.
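The receiving step can be pictured as collapsing a stream of raw tag reads into receipt quantities. This is an illustrative sketch only: the `(tag_id, sku)` read format, the function names and the purchase order quantities are assumptions, not any particular RFID middleware or ERP API. Each tag is counted once, because a reader can report the same tag several times as a pallet passes.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def received_quantities(reads: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Collapse raw (tag_id, sku) reads into a received quantity per SKU."""
    first_seen: Dict[str, str] = {}
    for tag_id, sku in reads:
        first_seen.setdefault(tag_id, sku)  # repeated reads of the same tag are ignored
    return dict(Counter(first_seen.values()))


def receipt_variances(received: Dict[str, int], ordered: Dict[str, int]) -> Dict[str, int]:
    """Received minus ordered, only for SKUs that don't match the (hypothetical) PO."""
    variances: Dict[str, int] = {}
    for sku in sorted(set(received) | set(ordered)):
        diff = received.get(sku, 0) - ordered.get(sku, 0)
        if diff:
            variances[sku] = diff
    return variances


reads = [("TAG-001", "SKU-A"), ("TAG-001", "SKU-A"), ("TAG-002", "SKU-A"), ("TAG-003", "SKU-B")]
received = received_quantities(reads)
print(received)
print(receipt_variances(received, {"SKU-A": 2, "SKU-B": 2}))
```

Only the variances would need a person’s attention; everything else could post to the receipt automatically.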

Quality Processes in Manufacturing

Traditional quality processes have depended on sampling techniques to determine whether products are being manufactured to standard. Not only is this imprecise, it also catches problems too late in the process. It creates inefficiencies and unproductive work at multiple levels, and IoT technologies integrated with ERP can significantly reduce these incidents.

For example, if you can catch any deviation from specifications while the product is being manufactured, you can stop the line and fix the issue. This would greatly reduce defective parts, if not eliminate them completely.

By introducing IoT technologies, the manufacturing equipment and the product line can be monitored 24x7. Suppose a small deviation in actuator speed can cause a complete component misalignment. If the production line supervisor notices such a deviation, he can immediately halt further production until corrective steps are taken.
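Catching deviations as they happen amounts to checking every reading against the specification instead of a sample. A minimal sketch, assuming a nominal value with a symmetric tolerance; the `Spec` class, the units and the readings are invented for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Spec:
    """Hypothetical specification: a nominal value with a symmetric tolerance."""
    nominal: float
    tolerance: float

    def in_spec(self, value: float) -> bool:
        return abs(value - self.nominal) <= self.tolerance


def first_deviation(readings: Iterable[float], spec: Spec) -> Optional[int]:
    """Index of the first out-of-spec reading, or None if every reading is in spec."""
    for index, value in enumerate(readings):
        if not spec.in_spec(value):
            return index
    return None


actuator_speed = Spec(nominal=120.0, tolerance=1.5)  # invented units and limits
stream = [120.2, 119.8, 120.9, 122.1, 120.0]
hit = first_deviation(stream, actuator_speed)
if hit is not None:
    print(f"Stop the line: reading {hit} ({stream[hit]}) is out of spec")
```

In practice the stop signal would also be written back to the ERP so the affected work order and parts can be quarantined.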

Fleet Management

One of the biggest beneficiaries of IoT and ERP integration has been the fleet management industry. Apart from the regular benefits like vehicle tracking and geofencing, data passed from IoT sensors can really help validate and achieve business goals.

As an example, each vehicle would already be managed as an asset in the ERP application, with components listed and preventive maintenance schedules in place. What if the data from the vehicle’s on-board diagnostics (OBD) is correlated with the maintenance data? Maybe the maintenance needs to be more thorough, maybe it can be delayed, maybe certain aspects of maintenance are being missed. Such invaluable insight would not be possible without ERP and IoT applications working together.
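Correlating OBD data with maintenance records can start as simply as comparing the odometer reported by the vehicle with the asset’s preventive maintenance schedule. Everything here is hypothetical: the record shapes, the distance-based interval, the example fault code, and the rule that fault codes appearing soon after a service suggest that service may need to be more thorough.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VehicleAsset:
    """Hypothetical ERP asset record with a distance-based PM schedule."""
    vehicle_id: str
    last_service_km: int
    service_interval_km: int


@dataclass
class ObdSnapshot:
    """Hypothetical telematics reading taken from the vehicle's OBD port."""
    vehicle_id: str
    odometer_km: int
    fault_codes: List[str] = field(default_factory=list)


def maintenance_flags(asset: VehicleAsset, obd: ObdSnapshot) -> List[str]:
    """Compare live OBD data with the asset's maintenance schedule."""
    if asset.vehicle_id != obd.vehicle_id:
        raise ValueError("OBD snapshot belongs to a different vehicle")
    flags: List[str] = []
    since_service = obd.odometer_km - asset.last_service_km
    if since_service >= asset.service_interval_km:
        flags.append(f"overdue by {since_service - asset.service_interval_km} km")
    elif obd.fault_codes and since_service < asset.service_interval_km // 2:
        flags.append("fault codes soon after service: review maintenance quality")
    return flags


truck = VehicleAsset("TRUCK-07", last_service_km=50_000, service_interval_km=15_000)
print(maintenance_flags(truck, ObdSnapshot("TRUCK-07", 66_000)))
print(maintenance_flags(truck, ObdSnapshot("TRUCK-07", 52_000, ["P0300"])))  # example code
print(maintenance_flags(truck, ObdSnapshot("TRUCK-07", 58_000)))
```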

Digital Supply Chain

Supply chains are being truly transformed by IoT devices that talk to ERP applications. The supply chain is no longer an array of opaque warehouses, stores, shipping terminals or trucks, but a constant stream of movement that gives you visibility down to SKU level if you so choose.

Imagine a situation where you are trying to figure out exactly when the components that you need to complete a product will arrive from China. Your MRP (Material Requirements Planning) can take the time of arrival into account, since the containers are tagged and constantly send information about their whereabouts. This could be a completely new avatar of the tried and tested JIT (Just in Time) concept.

ERP and IoT applications working together are transforming the way we receive information. The efficiency gains that ERP implementations can attain are limited only by our imagination…

Learn more about Captivix.

My Reports in GP

Today’s #TipTuesday post is about the My Reports functionality in Dynamics GP. There was a user question yesterday on the GPUG forums, where someone was asking how to modify their My Reports list. Great question! Here are some tips on getting reports on and off the My Reports list.

What is it?

My Reports is a section of Dynamics GP where a user can save reports they run frequently so they are one click away from running that report or Smartlist again (instead of searching through menus, for instance, to find it and set up their criteria).

You’ll find it on your home page if the “My Reports” pane is visible (check Customize This Page on your home page if it isn’t visible). If you’re a toolbar person, it’s on the “Standard” toolbar by default, but will not appear until you have at least 1 report in your My Reports section. Initially, it may look like this:

How to add a Smartlist – option 1

The first option, if you are creating a brand new Smartlist favourite, is to save it as Visible To: User ID. Smartlists “visible to user” automatically appear on your My Reports list without you doing anything else! I created a favourite in Financial > Account Transactions that is filtered to Document Status = Work. I’ve saved it under the name Unposted JEs.

Now, this is what My Reports looks like:

How to add a Smartlist – option 2

The second way to add a Smartlist to My Reports is better if you already have saved favourites OR if your favourites are not saved as “Visible to User ID”.

On any series navigation pane, there is a Smartlist Favourites option. Click on that to get a navigation list of all of the Smartlist Favourites in that series.

Mark a favourite you want on your My Reports pane and click Add To (in the My Reports section of the toolbar). Here’s another tip: when you add reports or Smartlists this way, you can rename the item before you add it to your My Reports pane.

Now that I’ve added that, the My Reports list shows 2 reports.

How to add a “normal” GP report

The last option I’ll show you is adding a regular GP report option to the My Reports pane. I’ll use the PM HATB report as an example here, but any report option window will look substantially the same, for core GP at least (ISV products may not offer this).

Note: in this example, I am adding a shortcut to an aged payables report, which means clicking on it in My Reports will generate the report, not open this window. For that reason, you’ll want to ensure that you make your filters and parameters meaningful and generic. What do I mean? In this example, I want the Print/Age as of date to be generic, not hard-coded. I’m choosing End of Previous Month as an example, as that would be pretty common. If I also wanted a “real time” version, I would save another one with a different choice, say “Current Date”. Whatever I do, I don’t want the option “Enter Date” with a real date, otherwise it uses that date every time. The exact same logic applies to Smartlists on My Reports.
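To see why a generic option keeps working month after month while an entered date does not, here is a small sketch of how such options could be resolved at run time. The function `resolve_as_of` is hypothetical and the option strings are simply modelled on the names in GP’s window; this is not how Dynamics GP is implemented internally.

```python
from datetime import date, timedelta
from typing import Optional


def resolve_as_of(option: str, today: date, entered: Optional[date] = None) -> date:
    """Turn a saved 'as of' option into a concrete date on the day the report runs."""
    if option == "Current Date":
        return today
    if option == "End of Previous Month":
        # first day of this month, minus one day
        return today.replace(day=1) - timedelta(days=1)
    if option == "Enter Date":
        if entered is None:
            raise ValueError("'Enter Date' needs an explicit date")
        return entered  # frozen: the same date on every run
    raise ValueError(f"unsupported option: {option}")


# The generic option moves with the run date; the entered date never does.
for today in (date(2018, 11, 13), date(2019, 1, 5)):
    print(today, "->", resolve_as_of("End of Previous Month", today),
          "| entered:", resolve_as_of("Enter Date", today, entered=date(2018, 9, 30)))
```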

After selecting a generic date range, and in my case no restrictions, I click on My Reports to save it. In keeping with my recommendation above, I am also editing the name to be clear about which date options I chose for this report. I want to know exactly what I will get when I click this link!

Now I’ve got 3 reports on the My Reports pane. Notice how the reports aren’t sorted? Ya, there is no real workaround for that other than actually adding them in the order you want to see them. Not the best design choice; perhaps I’ll check out the ideas site to see if that is already a suggestion. Sorting alphabetically would be perfect, since you can rename the items any time.

How do I manage the My Reports section?

I’m glad you asked!

The easiest way to edit them is to click on the pencil icon in the My Reports pane on the home page. Alternatively, on the Home pane, the shortcut bar has a Report Shortcuts section by default, and My Reports is one of the options. Those are the 2 most obvious ways to get here, although there are other ways I won’t get into.

Renaming reports on My Reports

In the navigation list for your My Reports list, once you mark an option, the Rename and Remove From options light up on the toolbar (among other things that I won’t cover in this post). Here I am playing with sort options by naming things to see if I can force the My Reports to sort differently (spoiler alert: no luck).

Here’s the My Reports pane after renaming. Doesn’t it drive you completely batty that it’s not sorting alphabetically? But I digress…

Removing items from My Reports – option 1

The second thing you can do in the navigation list for your My Reports list is remove items. Here I chose one of my reports and selected Remove From, and it’s now gone.

Removing items from My Reports – option 2

For “Visible to User ID” Smartlists, there is a second way to remove a report from your list. In Smartlist itself, change the favourite’s Visible To option to something other than User ID and save it (by clicking the Modify button), or, of course, remove the favourite entirely if you wish.

Once you do this it is *supposed* to disappear from your My Reports list. In my testing, on GP 2018 R2 no less, it wasn’t removed until I switched companies and went back to the company where I had my reports set up. I refreshed the home page before changing companies and it still displayed the report on my list. Clicking on the report resulted in this error: “The report that you selected was not found in the My Reports list.”

Sure enough, if I clicked on the pencil, the report was missing. I simply changed companies and went back and then it was gone as expected. That behaviour is likely a bug.

Takeaways

The key takeaways are these:

- My Reports is set up per user and company. This is unfortunate if you use several companies and would ideally like many of the same reports or Smartlists in each.

- Visible to User Smartlists automatically show up on your My Reports list. If you don’t want one to show up, you can remove it from the navigation list for My Reports without removing or changing the Smartlist favourite.

- Use generic dates like “end of month”, “end of previous month” where you can for reports based on dates.

- There was an issue if you upgraded from a version prior to GP 2013 to GP 2013 or later, where reports could get stuck in My Reports (the pane) but not be visible in the navigation list to remove or edit them. I recall having to fix one or two users’ issues via a SQL script to remove them. If I find the script again, I’ll post a “part 2” to this next week. I may not have kept it after we upgraded, as the issue was no longer relevant to me.

That’s the long and the short of this tip… I hope you find it useful!

D365 Quick Tip: Assign your records but keep control

GP Controller Series: Inventory Reconciliation: Stock Status vs Historical Aged Trial Balance

When reconciling inventory to GL in GP, there are two primary report options that companies use. Both of them have some pretty significant pros and cons. The two reports are the Historical Stock Status (HSS) report and the Historical Aged Trial Balance (HITB).

For a long time, all we had was the point-in-time stock status report in GP. To get this right, it needed to be run at month end. Miss the timing, and you were just stuck. Along came a historical version of that report, HSS, that finally allowed going back in time, but it had its own issues. Enter the HITB, a report designed to tie to the GL, but with a big implementation caveat.

Historical Stock Status is not designed to reconcile to the subledger. It may, and it’s often close, but the design of this report prevents a perfect reconciliation. For a long time, however, it was the only reasonable option. HSS starts with the current cost of an item multiplied by the quantity and then subtracts transactions to get back to the date selected. Working back to a date is the historical component. This report may not tie to the GL because it ignores the cost layers with that initial value. The current cost is really the last cost and often not representative of the full value.

For example, say the current cost is $100 and the quantity is 11 units. The report would start with a valuation of $1,100. But in inventory, this product was bought in two purchases: the first was 10 units at $80, and the second was a single unit at $100.

$80 x 10 = $800, plus $100 = $900, and $900 does not equal the $1,100 from the HSS. The difference can go in either direction. Also, HSS works with transactions and does not take inventory cost adjustments into account. It’s usually close, except when there are large price swings.
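The arithmetic above can be reproduced in a few lines. This sketch only models the HSS starting point (current cost times quantity on hand) against the sum of the actual cost layers; it deliberately ignores the step where HSS subtracts transactions to work back to the selected date. The function names are illustrative, not GP objects.

```python
from decimal import Decimal
from typing import Iterable, Tuple


def hss_starting_value(current_cost: Decimal, quantity_on_hand: int) -> Decimal:
    """HSS-style start: the current (last) cost applied to every unit on hand."""
    return current_cost * quantity_on_hand


def cost_layer_value(layers: Iterable[Tuple[int, Decimal]]) -> Decimal:
    """Layer-based value: each receipt's quantity at the cost it was bought at."""
    return sum((qty * unit_cost for qty, unit_cost in layers), Decimal("0"))


# The example above: 10 units at $80, then 1 unit at $100 (the current cost).
layers = [(10, Decimal("80")), (1, Decimal("100"))]
on_hand = sum(qty for qty, _ in layers)

hss = hss_starting_value(Decimal("100"), on_hand)
layered = cost_layer_value(layers)
print(f"HSS start: ${hss}  cost layers: ${layered}  difference: ${hss - layered}")
```

Decimal is used rather than float so that currency amounts compare exactly.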

HITB was introduced with GP 10 and is designed to be the reconciling companion for inventory. However, HITB requires data not previously present in GP, so new tables were added, and existing inventory transactions did not populate them. To make HITB work with existing data, Microsoft provided an IV reset tool that would populate the tables, but it required resetting all the inventory cost layers to a new starting layer. For some companies, this would result in a significant change to their inventory valuation, and they were unwilling to do that.

HITB collects data whether the reset tool is used or not; it’s just not accurate if there are pre-HITB items still in inventory. For most folks, this should no longer be a problem, since HITB was released with GP 10 in 2007, but there are some manufacturers that hold inventory for a long time (think Christmas trees, aged bourbon, etc.), along with plenty of people who do a poor job of cleaning out obsolete inventory.

So here is the bottom line.

- If your company first implemented GP with GP 10 or higher, use the HITB.

- If your company ran the IV Reset Tool somewhere along the way, use the HITB.

- If every item in inventory has turned at least once since 2007, you should be good with HITB.

- If you are on a version prior to 10, the only option is HSS.

- If your company started with GP prior to GP 10, you have not run the IV reset tool, and you still have a few leftover items in inventory, consider running the tool to evaluate how much your inventory would change. The tool provides this information prior to finalizing anything. If the number is small enough, consider resetting the inventory layers to be able to use HITB.

One more note: both of these reports are often requested in Excel. They are both very hard to get from GP into any kind of reasonable format in Excel. The best native option is to use the SSRS versions and export them, but even that requires a lot of cleanup.

The best option is to purchase and use Historical Excel Reporting to run these reports directly in Excel.

Links to all the posts in this series can be found at http://mpolino.com/gp/gp-controller-series-index/

Alternate Key Manager XrmToolBox Tool posted and some lessons learned…

New View Designer is in Public Preview

The UI Team is hard at work delivering an improved user experience in the entity customization process. They have just moved the View Designer into public preview.

If you want to give it a try, open a D365CE or CDS environment that you have been working with from this URL – https://preview.web.powerapps.com

Then navigate to Data > Entities > Accounts > Views (or any other entity of your choice). When you click on a view in the list, it will now open up in the new View Designer.

In the upper right-hand corner of the screen you will see the “Save” and “Publish” buttons.

“Save” is a split button with “Save as” as an additional action. “Publish” now performs both a Save and a Publish. Whoo hoo!

The team will be adding a tooltip and learning callout to help clarify that Publish is both Save & Publish. For all the old-timers, clicking Save and then Publish is going to be a hard habit to break.

This is just one of many improvements in the new design process.

The upcoming Form Designer will have the same behavior when it reaches public preview in the next month.

The post New View Designer is in Public Preview appeared first on CRM Innovation - Microsoft Dynamics 365 Consulting and Marketing Solutions.

Microsoft Dynamics GP 2018 R2: Monthly and Bi-Monthly Recurring Batches

The latest trends in the mobile crane market

Since the world’s population is growing, there is also a rising demand for the construction of houses. Major investments are taking place in the construction industry. Increased investments in real estate show that this is a growing market. Governments all over the globe are renovating their infrastructure after maintenance came to a complete standstill during the financial crisis. All these projects are causing an increased demand for heavy machinery and construction equipment. Mobile cranes are very versatile and extensively used in various projects in shipping, the oil and gas industry, power plant construction, rail and road building, and the construction of dams.

In the Asia-Pacific region there is a large number of emerging countries. Combined with high rates of urbanization and industrialization, it’s not surprising that this is one of the leading mobile crane markets. The European crane rental market is also significant, but not as large as the Asia-Pacific region. The Middle Eastern and North American markets, however, are quite saturated due to the declining demand for oil over the past few years.

Growing demand

When it comes to mobile cranes, we’re talking about several different types: all-terrain cranes, rough-terrain cranes, truck cranes, crawler cranes, and others. All these types of cranes are used in a variety of industries. One of the largest industries that use mobile cranes is the mining industry. It uses very large cranes for the excavation of raw materials from open-cast mining fields. It’s not the fastest growing industry, but it has existed for a very long time and is still growing gradually. Therefore, there will always be a high demand for these cranes. Over the years the need for crane rental software has increased as well.

DynaRent has piqued the interest of several crane rental companies. First of all, this is because it is a proven equipment rental solution, but also because several crane rental companies already use it successfully. Curious to know more about our solutions? Send us an email at info@highsoftware.com or visit our website www.highsoftware.com

Contact Synchronisation with Microsoft Outlook

Playbooks for Dynamics 365 Activity Templates

In my previous post I explored the current Dynamics 365 Customer Engagement solution update practices and used the Playbooks feature as an example. Here’s a quick overview of what the actual Playbooks offer.

The official MS documentation, “enforce best practices with playbooks”, gives you a list of what the initial October ’18 release of Playbooks contains. The feature is essentially a way for a sales manager to define a set of activities that sales users should perform when a real-life event takes place that the playbook has been designed for. A checklist, if you will.

To get started, you’ll need to have the Sales app upgraded to a recent enough version, so that the Sales Hub UCI app displays Playbook Templates under the App Settings:

Notice that you won’t find these anywhere in the legacy web client (“classic UI”). One thing you might want to do first via that legacy client, though, is to ensure that the associated roles for Playbook Manager and Playbook User are assigned to the required user accounts.

To kick things off, you could create some Playbook Categories for grouping your playbooks, since that’s a compulsory lookup on the Playbook Template form. The actual configuration work all takes place on the template, where you’ll first of all specify the record types that the playbook applies to. Right now it’s only lead, opportunity, quote, order and invoice, so don’t plan on using playbooks for any custom entities or for Dynamics 365 apps other than Sales.

The template shows a subgrid of Playbook Activities, which look pretty much like your regular activities on the surface. They are a separate entity, however, as this is how you define the parameters for an activity (task, phone call or appointment). You have the usual subject, description, duration etc. fields you’d find on a normal activity, but instead of fixed dates you give them relative due dates, calculated from when the playbook is launched.
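Relative due dates are simple offset arithmetic from the moment of launch. The sketch below illustrates the idea; `PlaybookActivityTemplate`, `due_after_days` and `launch` are hypothetical names, and it assumes offsets expressed in whole days, which may not match the units the actual Playbook Activity form uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass(frozen=True)
class PlaybookActivityTemplate:
    """Hypothetical stand-in for a Playbook Activity row on a template."""
    activity_type: str   # task, phone call or appointment
    subject: str
    due_after_days: int  # relative due date, counted from launch (assumed unit)
    duration_minutes: int = 30


def launch(templates: List[PlaybookActivityTemplate],
           launched_at: datetime) -> List[Tuple[str, str, datetime]]:
    """Turn each relative offset into a concrete due date at launch time."""
    return [
        (t.activity_type, t.subject, launched_at + timedelta(days=t.due_after_days))
        for t in templates
    ]


templates = [
    PlaybookActivityTemplate("phone call", "Call the decision maker", 1),
    PlaybookActivityTemplate("task", "Send revised proposal", 3),
    PlaybookActivityTemplate("appointment", "Follow-up meeting", 7, 60),
]
for activity in launch(templates, datetime(2018, 11, 13, 9, 0)):
    print(activity)
```

Launching the same template a week later simply shifts every due date by a week, which is the point of storing offsets instead of fixed dates.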

How you actually launch a playbook is via the applicable entity form. Let’s say we have created a Playbook Template for the opportunity entity and activated it. When navigating to an opportunity record we’ll see a “Launch Playbook” button on the Command Bar. This gives a list of playbooks the user has access to, with the option to launch one of them.

This creates the actual activities, presumably for the user who runs the “Launch Playbook” action. The owner of the associated opportunity, at least, had no effect on this, so all the activities end up in my own My (Open) Activities view:

The one thing to pay attention to here is that nowhere in the resulting activity does it actually say what the related opportunity was to which the playbook was launched. Instead the Regarding field points to the Playbook record, which essentially is an instance of the Playbook Template. Sure, here on this form you see the regarding opportunity, but for anyone working via their My Activities view this isn’t really ideal.

It’s understandable why this is the case, though: you just can’t set Dynamics 365 activities to have multiple Regarding records. If you want to track the progress of the associated activities, then they need to be grouped under the Playbook (instance). This does give you metrics like the start date and estimated close date of the playbook, and the number of total vs. completed activities. There’s also a status value for the playbook itself, which you can complete as “successful”, “not successful”, “partially successful” or “not required”.

Personally I don’t find this structure, with the resulting activities hidden away behind the Playbook record, very user friendly. You can’t see the Playbook entity anywhere in the main Sales Hub sitemap; it’s just a related record for the main business record, or for the Playbook Template. If we have open activities that are about working on a particular opportunity but you don’t actually see them in the opportunity Timeline, then did they ever even take place? Sure, you could try to build a subgrid with a deep FetchXML query to get over the 2 hops in the relational data model, but that’s a bit of a stretch.

The quicker way to simplify things is to go on the Playbook Template and set the field “Track Progress” to “No”. What this will do is generate all the activities directly regarding the business record. So, when launching the playbook for a record like lead, all of its activities will be found directly in the lead form’s Timeline. Also your sales users will directly see which lead they should be completing the calls, tasks or appointments for.

That’s pretty much all the features I was able to discover when playing around with Playbooks. If you need to create a fixed set of activities for fixed types of entities (5 currently supported ones) on a regular basis, then this can sure be a handy feature to have around. It’s not all that advanced in its current format, as there doesn’t seem to be any obvious way to automate the launching of Playbooks when a data driven event takes place. That may be something we’ll see in the future, though, since a feature like this would surely fit well into the AI demos of how to automate your sales activities when a machine learning algorithm somewhere in the cloud detects that a customer is in danger of discontinuing their service contract, for example.

Another thing to consider is how these Playbooks relate to the task lists you may have earlier defined via Business Process Flows. The checkboxes on BPF stages are certainly a more structured way to present things that must get done, but they suffer from the fact that they are just a checkbox on a record somewhere. Playbooks can produce actual activities that will sit on the user’s ToDo list until they get completed, with notifications and all the usual activity integration. Given how the new Unified Interface presents the BPF control as collapsed by default, thus hiding the fields defined for the BPF stages, these are less and less likely to be noticed by Dynamics 365 users. You do have more control over enforcing a business process via BPF fields, though, whereas Playbooks in their current form appear more as a guidance for actions the user should complete – as long as they’re aware that there’s a Playbook waiting to be launched.

The post Playbooks for Dynamics 365 Activity Templates appeared first on Surviving CRM.

The Field Service Sessions

Friends don’t let friends compromise security: Extending cybersecurity to supply chains

Network attacks are on the rise, and as with all things security, it’s always best to get ahead.

This summer, the Wall Street Journal ran a story about Russian hackers infiltrating “air-gapped” networks of US utility companies with “relative ease”, just one part of a tale that’s becoming all too common. The NY Times described another scenario where a security researcher with cybersecurity firm UpGuard found tens of thousands of sensitive documents from major auto manufacturers exposed on the open internet, including engineering plans, factory schematics, contracts, and non-disclosure agreements. And though they’re not cyber attacks per se, we also saw serious data privacy breaches with Facebook via Cambridge Analytica and Google via the Google+ API bug.

What remains consistent throughout these incidents and the countless others like them? Each one resulted from the exploitation of weak security by third parties like partners, suppliers, and customers. Whether intentional acts by bad actors, or through insufficient data controls, these incidents are always a possibility.

As the NYT article lays out:

“Many of the worst recent data breaches began with a vendor’s mistake. In 2013, thieves infiltrated Target’s payment terminals and stole credit and debit card information from 40 million customers. The attackers got in by hacking one of Target’s heating and ventilation contractors, then using information stolen from that business to gain access to Target’s systems.”

A November 2017 study of CISOs from the Ponemon Institute found that only 22% of organizations hold their business partners, vendors, and other third parties to high security standards. Even more astonishing, this is despite the fact that over half the companies surveyed had suffered a breach as a result of a vendor’s lax security posture. In life-critical areas of our economy, such as the utilities sector, regulators are recognizing exactly how big a risk this is. Just this week, the Federal Energy Regulatory Commission (FERC) approved new cybersecurity standards for supply chain risk management covering documented management plans, electronic security perimeters where vendors operate, configuration change management, and vulnerability assessments.

As Microsoft partners, many of you are directly involved in assessing, mitigating, and implementing security solutions for our mutual customers. Microsoft now has over 100 Co-Sell Ready third-party security solution offerings from our partner community, including Cloud Security Assessments, Advanced Threat Analytics onboarding, Secure Score implementations, real-time spear phishing and cyber fraud defense, EMS Managed Services, MFA deployment, training, and many others. However, how often do you expand the security work you do with our customers through their extended supply chain? How often do our customers even consider this at all?

Selling on fear isn’t always the best approach, yet sometimes we notice complacency among customers who have yet to experience the agony of a serious data breach. Customer obsession can make for uncomfortable conversations about the risk our customers are opening themselves up to. If customers don’t take appropriate protective action for their environments, all the way through to their partners’ IT environments, they face a legitimate risk that they need to know about. But what if establishing a strong defense beyond the customer’s IT estate is impractical or impossible? How should our customers respond then?

Matt Soseman, one of our security-focused Cloud Solution Architects, posted an excellent summary of our client security services back in July: Preventing a data breach, avoiding the news, and keeping your job. In it, he explains why identity should be treated as the new perimeter. It doesn’t matter how tightly you’ve locked down your network and data: if your identity is compromised, that protection is meaningless. I strongly suggest taking a closer look at this piece.

Our recommendation to partners involved in a security conversation with our customers, whether it is fundamentally a security engagement or as a consideration of a larger project, is to follow these three steps:

- Offer cyberattack simulations for your customer’s vendors on behalf of your customer. Office 365 Attack Simulator is a great example of a tool we use internally at Microsoft as well.

- Require Multi-Factor Authentication for any third-party organization that touches your customer’s systems/data. Azure Multi-Factor Authentication is a great solution for Office 365 and thousands of other cloud SaaS offerings.

- Enable Conditional Access via Azure Active Directory and combine with a robust policy for vendor access to privileged customer data.

These techniques and many more are detailed in Matt Soseman’s blog as well as through our Modern Workplace Webcast series, which you can register for here.

Scott Emigh is the Chief Technology Officer for Microsoft’s US One Commercial Partner (OCP) organization. With an extensive background in tech and solution sales, Scott leads a national team of Solution Architects, Evangelists, and Strategists all focused on developing and enabling our US partner ecosystem: ISVs, System Integrators, Managed Hosters, and Volume Channel. Our mission at Microsoft remains steadfast: to empower every organization on the planet to achieve more. Our partner ecosystem is at the forefront of bringing this powerful mission to life. OCP will work to transform our partner ecosystem and simplify the programs and investment structure for our partners to drive growth and profitability. We will provide the programs, tools, and resources you need to build and sustain a profitable, successful cloud business.

Will there be 2018 ACA compliance relief for employers?

Historically, the IRS has provided relief to US employers with regard to ACA compliance by:

- Granting penalty relief for a “good-faith effort” on ACA Forms 1095-C furnished to employees and filed with the IRS.

- Extending the deadline for furnishing forms to employees by 30 days.

See our post on last year’s relief here.

So the big question is: Will the IRS do the same for 2018 forms? Maybe.

The IRS Information Reporting Program Advisory Committee (IRPAC) published a report in October requesting that the IRS apply this relief to 2018 forms as well:

“A. Reporting by Insurance Companies and Applicable Large Employers under IRC §6055 and §6056

IRPAC would like to thank the IRS for adopting several of our prior year recommendations dealing with §§ 6055 and 6056 during tax years 2017/2018 including the extension of good faith efforts penalty relief for reporting of incorrect or incomplete information reported on 2017 Forms 1095-B and Forms 1095-C filed in 2018.

IRPAC recommends good faith efforts penalty relief for reporting of incorrect or incomplete information reported on returns, as well as a 30-day delay for furnishing forms due January 31, 2019, is extended to the 2018 Forms 1095-B and Forms 1095-C filed in 2019.”
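To put the requested 30-day delay in concrete terms, the short Python sketch below works out the calendar dates for the January 31, 2019 furnishing deadline quoted above. It only does date arithmetic. `delayed_deadline` and `next_business_day` are hypothetical helpers. Rolling a weekend date forward to Monday is an assumption about how such a deadline would apply; IRPAC does not state it. Public holidays are ignored.

```python
from datetime import date, timedelta


def delayed_deadline(original: date, days: int = 30) -> date:
    """Push the original due date out by a fixed number of calendar days."""
    return original + timedelta(days=days)


def next_business_day(d: date) -> date:
    """ASSUMPTION: roll a Saturday/Sunday forward to Monday; holidays are ignored."""
    while d.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        d += timedelta(days=1)
    return d


if __name__ == "__main__":
    raw = delayed_deadline(date(2019, 1, 31))
    print(raw, raw.strftime("%A"))   # 2019-03-02 Saturday
    print(next_business_day(raw))    # 2019-03-04
```

The raw 30-day date falls on a Saturday, so the sketch also shows the following Monday. The official date is whatever the IRS publishes if it grants the relief.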

IRPAC also addressed a few other ACA topics that affect US employers:

1. The Tax Cuts and Jobs Act has set the individual penalty for not having ACA qualified health insurance to zero effective with the 2019 calendar year. IRPAC has requested that the IRS provide guidance on the implications of this change as soon as possible.

“In addition, IRPAC recommends that IRS issue guidance as soon as practical regarding changes, if any, to reporting requirements due to the reduction of the Individual Shared Responsibility Payments to $0 for calendar year 2019.”

2. The final ACA topic in the IRPAC report concerns challenges employers have experienced when changing ACA service providers. Currently, forms submitted by one service provider cannot be corrected by a subsequent provider if the employer switches providers.

“Finally, as the compliance environment for ACA reporting becomes more mature, IRPAC recommends that the IRS adopt new procedures for submission of prior year corrections through the AIR system by which a new transmitter will be able to submit corrections that were previously transmitted by a filer’s prior service provider.”

As always, Integrity Data endeavors to be your partner in staying compliant with the Affordable Care Act. Continue to follow our blog as we obtain more information in this area.

SiteMapName in the AppModuleSiteMap is null or empty error while importing V9 Solution in Dynamics 365 Customer Engagement

Create LedgerDimension from main account and financial dimensions

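In the example below, there is an account structure with MainAccount-BusinessUnit-Department segments and an advanced rule with a Project segment. The class first builds a default dimension from the department, business unit, and project values. It then combines that default dimension with the main account to produce the ledger dimension.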

class TestLedgerDim
{
    public static DimensionDynamicAccount generateLedgerDimension(
        MainAccountNum _mainAccountId,
        str _departmentId,
        str _businessUnit,
        str _projectId)
    {
        DimensionAttributeValueSetStorage dimensionAttributeValueSetStorage
            = new DimensionAttributeValueSetStorage();

        // Local function: adds the value for the given dimension attribute to the set.
        // Empty strings and values that cannot be found are skipped.
        void addDimensionAttributeValue(
            DimensionAttribute _dimensionAttribute,
            str _dimValueStr)
        {
            DimensionAttributeValue dimensionAttributeValue;

            if (_dimValueStr != '')
            {
                dimensionAttributeValue =
                    DimensionAttributeValue::findByDimensionAttributeAndValueNoError(
                        _dimensionAttribute,
                        _dimValueStr);
            }

            if (dimensionAttributeValue.RecId != 0)
            {
                dimensionAttributeValueSetStorage.addItem(dimensionAttributeValue);
            }
        }

        DimensionAttribute dimensionAttribute;

        // Visit only the Department, BusinessUnit and Project dimension attributes.
        while select dimensionAttribute
            where dimensionAttribute.ViewName == tableStr(DimAttributeOMDepartment)
               || dimensionAttribute.ViewName == tableStr(DimAttributeOMBusinessUnit)
               || dimensionAttribute.ViewName == tableStr(DimAttributeProjTable)
        {
            switch (dimensionAttribute.ViewName)
            {
                case tableStr(DimAttributeOMDepartment):
                    addDimensionAttributeValue(dimensionAttribute, _departmentId);
                    break;

                case tableStr(DimAttributeOMBusinessUnit):
                    addDimensionAttributeValue(dimensionAttribute, _businessUnit);
                    break;

                case tableStr(DimAttributeProjTable):
                    addDimensionAttributeValue(dimensionAttribute, _projectId);
                    break;
            }
        }

        // Persist the collected values as a default dimension.
        RecId defaultDimensionRecId = dimensionAttributeValueSetStorage.save();

        // Combine the main account's default account with the default dimension.
        return LedgerDimensionFacade::serviceCreateLedgerDimension(
            LedgerDefaultAccountHelper::getDefaultAccountFromMainAccountRecId(
                MainAccount::findByMainAccountId(_mainAccountId).RecId),
            defaultDimensionRecId);
    }

    /// <summary>
    /// Runs the class with the specified arguments.
    /// </summary>
    /// <param name = "_args">The specified arguments.</param>
    public static void main(Args _args)
    {
        // Prints 110110-001-022-000002
        info(
            DimensionAttributeValueCombination::find(
                TestLedgerDim::generateLedgerDimension(
                    '110110',   // main account
                    '022',      // department
                    '001',      // business unit
                    '000002')).DisplayValue);   // project
    }
}
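The method takes `_departmentId` before `_businessUnit`, but the printed value follows the account structure's segment order. The Python sketch below is a rough model of that ordering only, which may help explain why the output is 110110-001-022-000002. It is neither X++ nor the Dynamics 365 API. `build_dimension_set`, `display_value`, and the `SEGMENT_ORDER` list are hypothetical. The segment list is assumed from the MainAccount-BusinessUnit-Department structure plus the Project advanced rule. Empty values are skipped, mirroring the `_dimValueStr != ''` check. The sketch also drops those segments from the output string, which may not match how the real DisplayValue renders blank segments.

```python
# ASSUMPTION: segment order taken from the example account structure
# (MainAccount-BusinessUnit-Department) followed by the advanced-rule Project segment.
SEGMENT_ORDER = ["MainAccount", "BusinessUnit", "Department", "Project"]


def build_dimension_set(department_id: str, business_unit: str, project_id: str) -> dict:
    """Hypothetical stand-in for the default dimension: keep only non-empty values."""
    candidates = {
        "Department": department_id,
        "BusinessUnit": business_unit,
        "Project": project_id,
    }
    return {name: value for name, value in candidates.items() if value != ""}


def display_value(main_account: str, dims: dict) -> str:
    """Join populated segments in account-structure order, not argument order."""
    values = {"MainAccount": main_account, **dims}
    return "-".join(values[segment] for segment in SEGMENT_ORDER if segment in values)


if __name__ == "__main__":
    dims = build_dimension_set("022", "001", "000002")
    print(display_value("110110", dims))  # 110110-001-022-000002
```

The ordering comes from the segment list, not from the dictionary or the argument order. That is the point the X++ example relies on when it passes the department before the business unit.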